Google Maps

Discovered ways to improve Google Maps via various usability tests

Overview

Google Maps is a well-established product that assists people with navigation. As part of my Usability Evaluation course, 3 methods were used to analyze its website. Synthesized findings revealed what worked well and what could be improved.

- Team: 3 Designers (1 moderator and 2 observers, one logging qualitative metrics and one logging quantitative metrics)

- Role: Heuristic evaluation, Task construction, Conducting usability tests, Assisting with recording metrics

- Timeline: 1 month (Feb '18)

Goal

College students rely heavily on navigation to get to places such as campus and work. The purpose of these tests is to evaluate the usability of the Google Maps website and see how helpful it is for college students.

Users & Audience

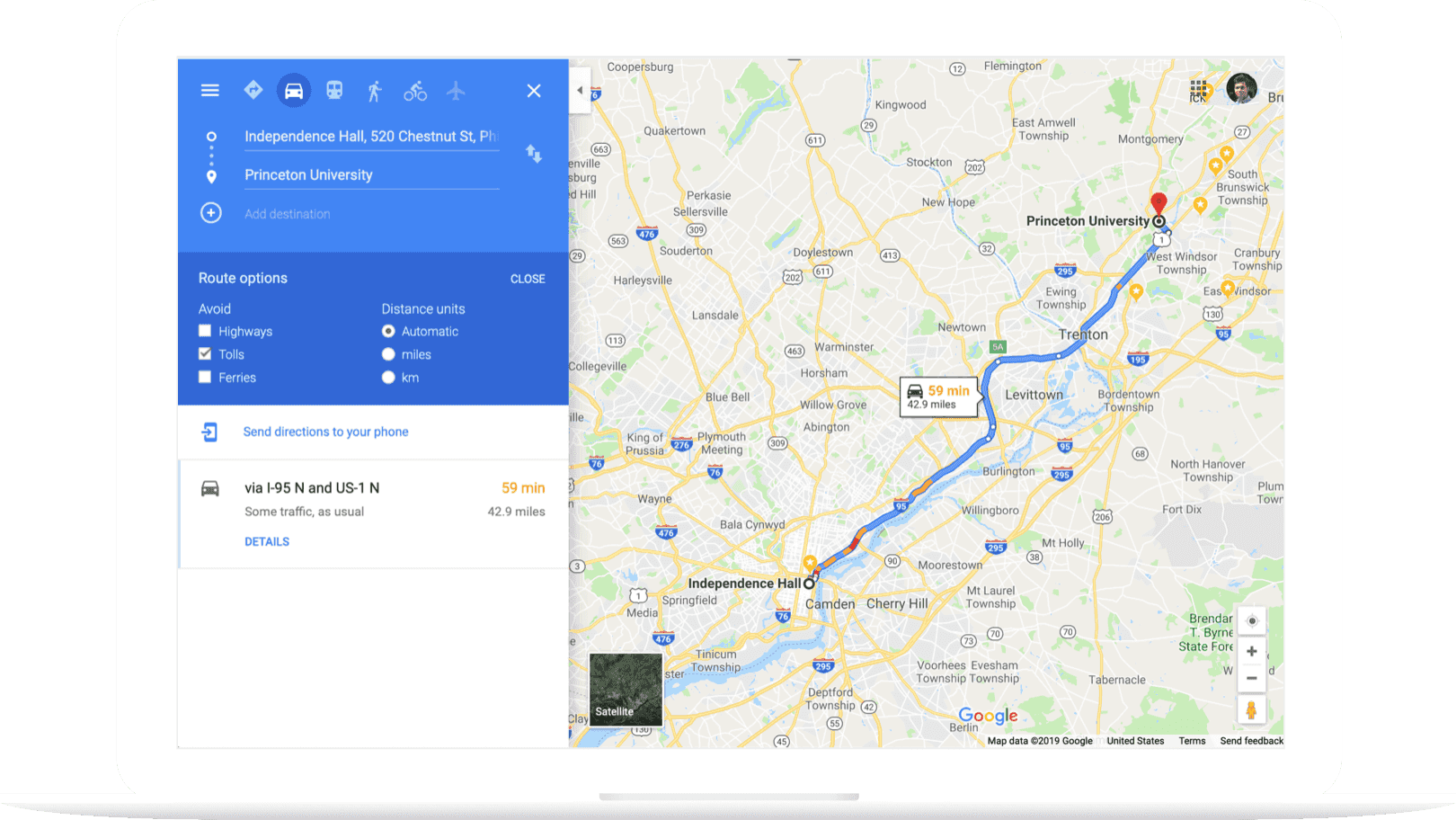

Scope

For this project, testing was limited to the Google Maps website. The tests focused on how the website helps users find locations and use navigation.

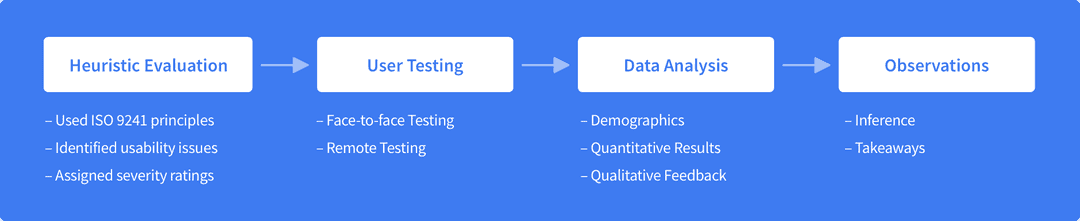

Process

We started with a heuristic evaluation to quickly assess the interface and identify where the usability issues were. We used these findings to create a task booklet, which we then used first for face-to-face and then for remote usability tests.

In the end, the results were compiled to identify what Google Maps does well and where it needs improvement.

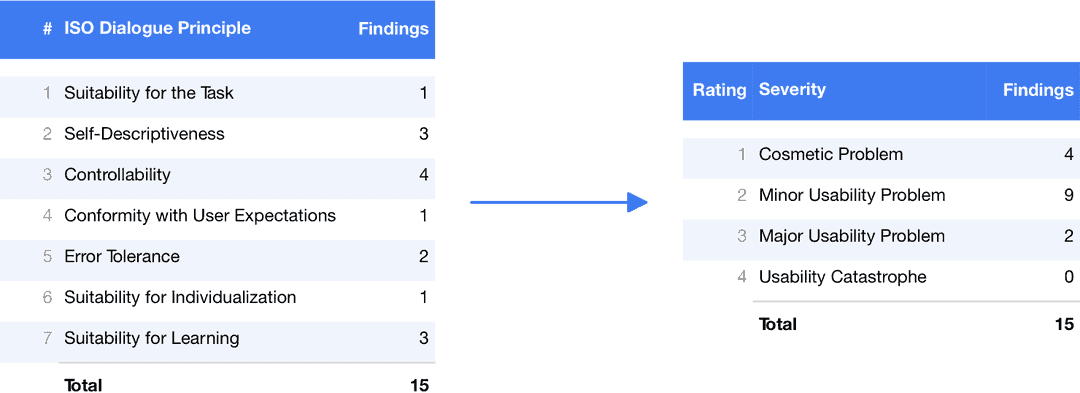

Heuristic Evaluation

- Reviewed website using ISO 9241 principles

- Identified issues and assigned severity ratings

- Aided in creating task booklet

User Testing

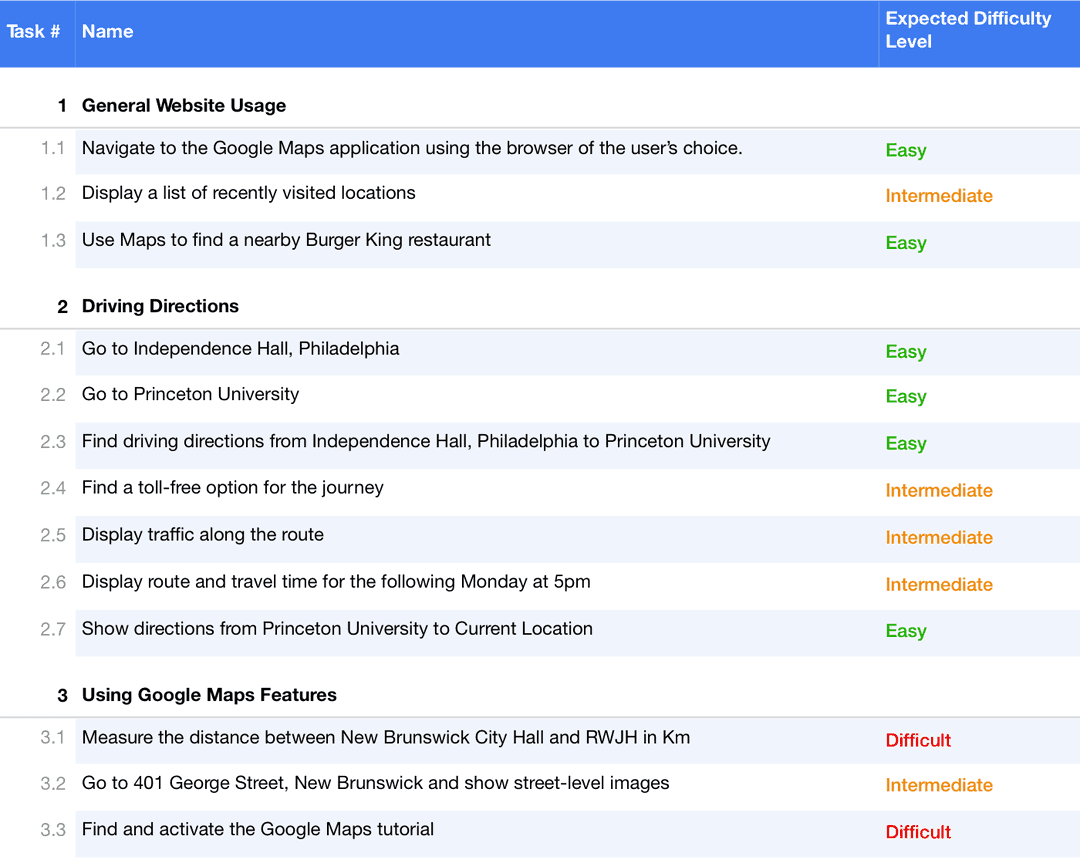

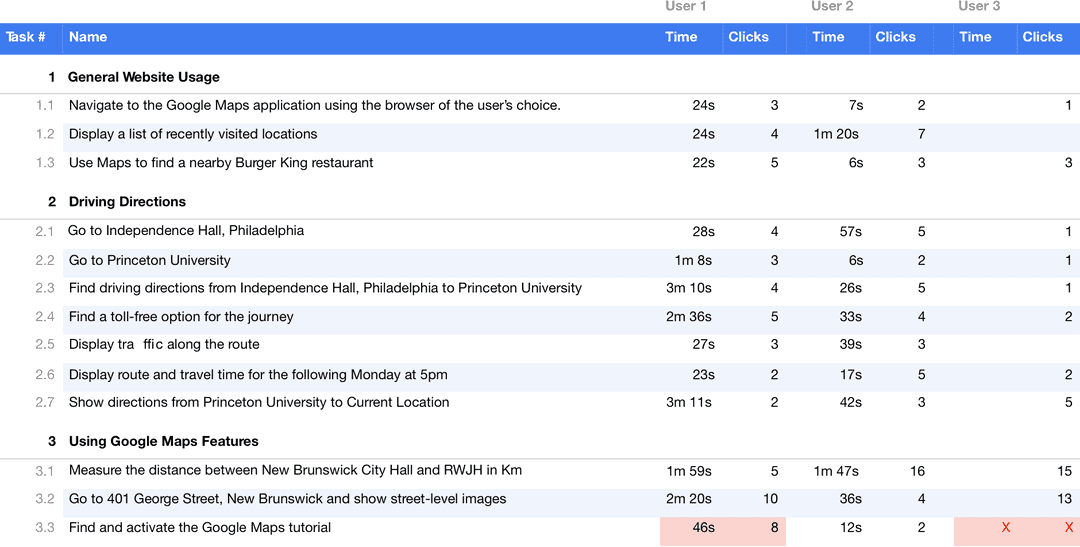

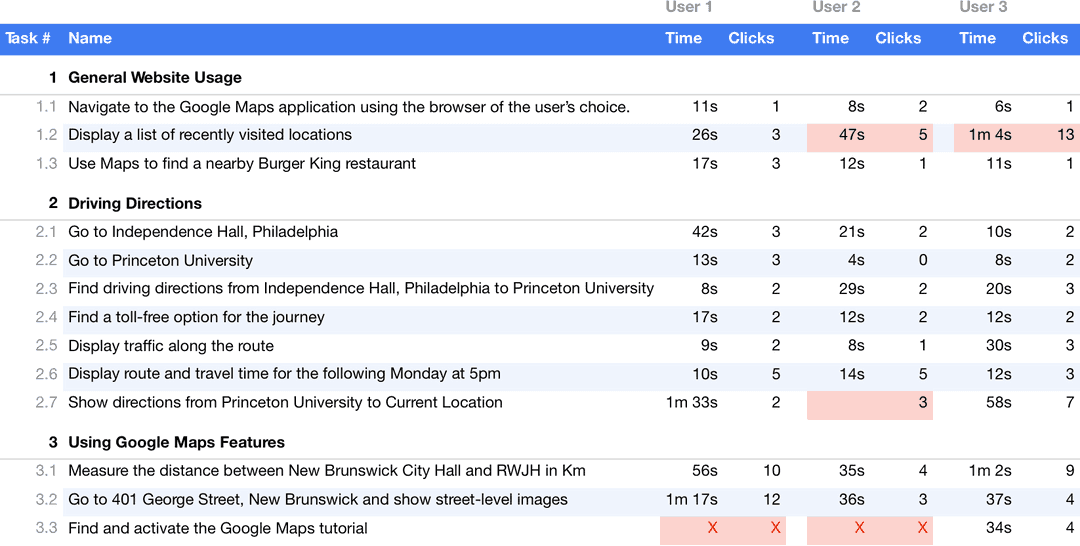

The testing was conducted by one moderator and two observers, who logged quantitative and qualitative data respectively. Our team of 3 took turns carrying out each task.

- 6 participants

- 30 min each

We constructed our tasks based on findings from the heuristic evaluation. We then conducted face-to-face and remote user testing.

Face-to-face testing - quantitative logs

Remote testing - quantitative logs

Based on both qualitative and quantitative findings, we discovered key areas that work well and those that need improvement.

What works

Most participants found the left search pane the most useful. Tasks involving it took them the least time and effort to complete. Here are some other areas that users had no problem interacting with.

- Search bar

- Route filters

- Recent searches

- Reverse origin and destination

Areas of opportunity

The user testing revealed 3 problem areas. They're highlighted below along with recommendations.

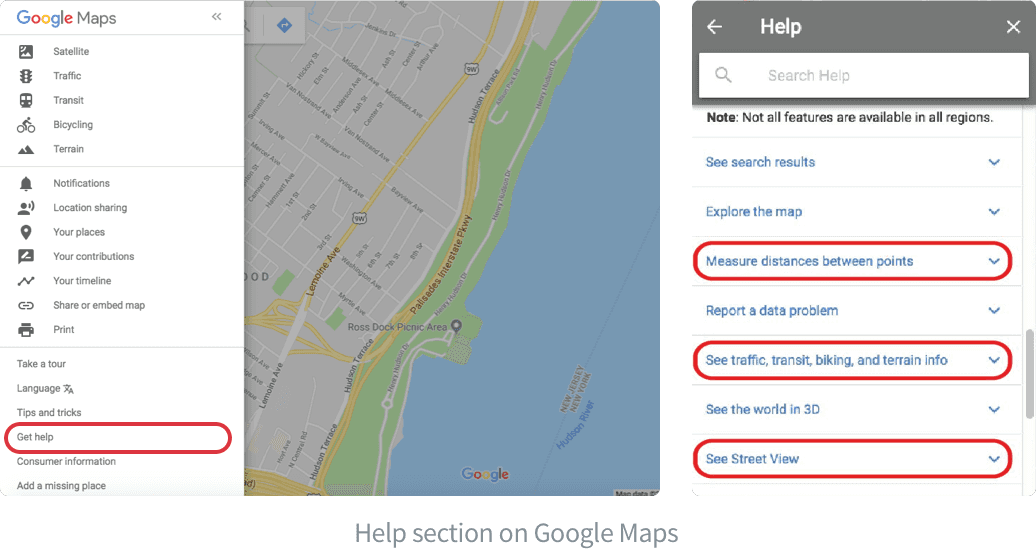

No one used Help

- No one used Get Help or Tips and Tricks when stuck on a task

- Answers to 3 of the tasks were on the first page of results

Recommendation

- Tips and Tricks could use more prominence and a descriptive name for discoverability

- The sidebar could display the most common “How do I…” help topics to help onboard new users

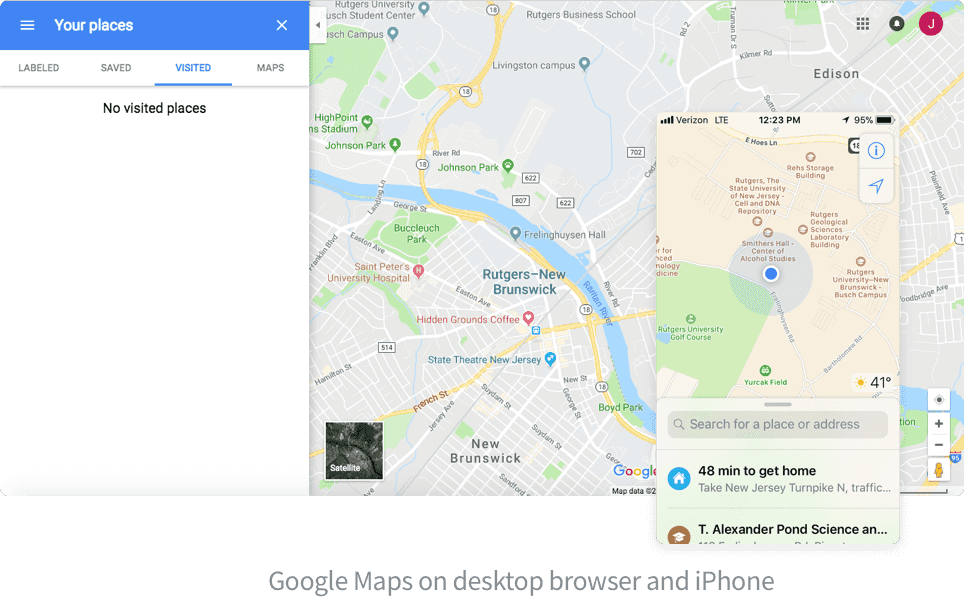

Platform inconsistency

- Users were not as familiar with the desktop version as with the native app

- Users said certain tasks were easier on their phone than in the browser

Recommendation

The native app could be referenced when designing for web to increase familiarity (since users were more familiar with the app).

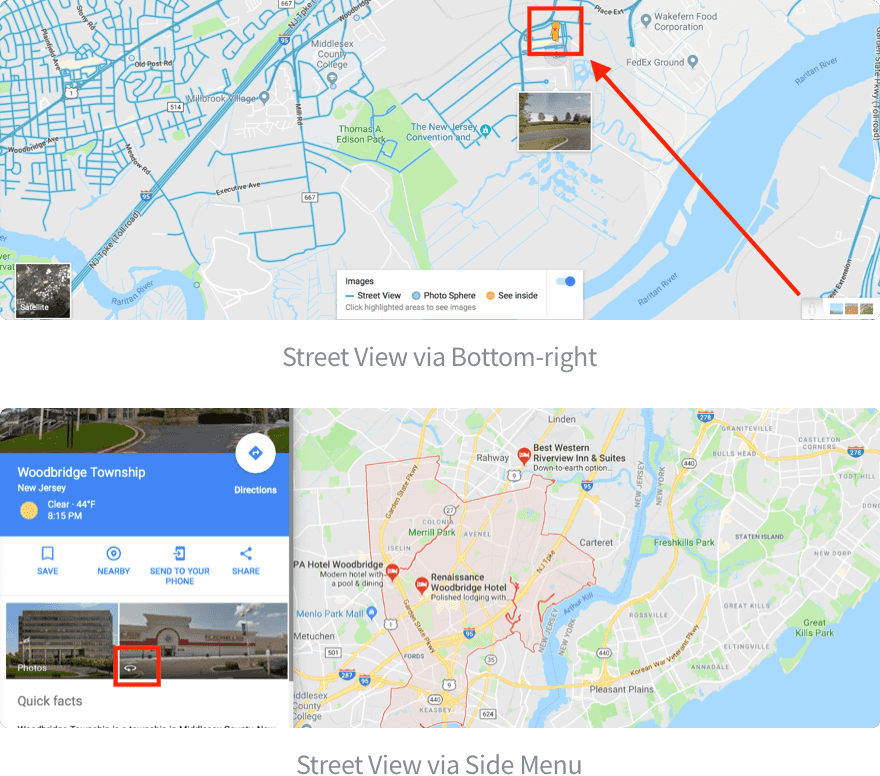

Street View inconsistency

Street View had a different icon and interaction in the side menu than in its bottom-right placement. This hurt recognizability.

Recommendation

- Regardless of location, Street View's icon and interaction could be made consistent to improve familiarity with the feature

- When viewing a location, giving more focus to Street View could increase discoverability

Key takeaways from user testing

- Differences between desktop and mobile versions cause friction

- Each user learned something new about the interface

- Google Maps is in a very usable state but there are opportunities to improve

Personal learnings

- Heuristic evaluation helped guide task construction and test our early findings

- A moderator's ability to establish trust and comfort with the participant directly affects the participant's performance

- Non-verbal cues were easy to pick up in person

- Remote testing made it harder to engage participants in active communication